By Cara Mico

First, what is AI? It’s expansive, it’s in almost everything, and it’s already more intelligent than most living humans.

AI is diverse. In some cases it’s a set of programming parameters; in others it’s a full suite of programs working in parallel to accomplish very complex tasks, such as identifying lung cancer cells in humans or “enabling the military to transcend a traditionally reactionary approach to terrorism and become more proactive by anticipating future terrorist activities and intervening before an attack occurs.”

Totally not terrifying at all. Thank you, Minority Report. This is why we can’t have nice things.

I’m not going to critique military uses of AI; I honestly don’t know how I feel about them one way or the other. While I’m fairly up to date on global news, I’m not in the military and I don’t know what they know. So I’ll leave that for people with more information than I have to digest.

Instead, I’m going to help you understand the growing world of artificial intelligence for image generation. Seems benign, right? HA!

No, it’s equally terrifying, but at least I’m more qualified to discuss this topic: I have multiple years of higher education in art and art administration, plus part of a Ph.D. program in art history that I dropped because of Covid.

The world of AI image generation isn’t new. Computers have been rendering images from human text inputs for years. What’s novel about the latest generation of programs I’m reviewing is their complexity and their ability to create with apparent subjectivity.

Over the years I’ve used almost every publicly available AI tool, and I dream of one day training my own Jarvis. So when I tell you that you should be concerned about what I found using the latest tool, Dall-E, this isn’t me being a Luddite. I generally embrace new technology and have intermediate programming knowledge.

My qualms with this technology come from two places: the data the program is trained on, and its outputs.

First, the good.

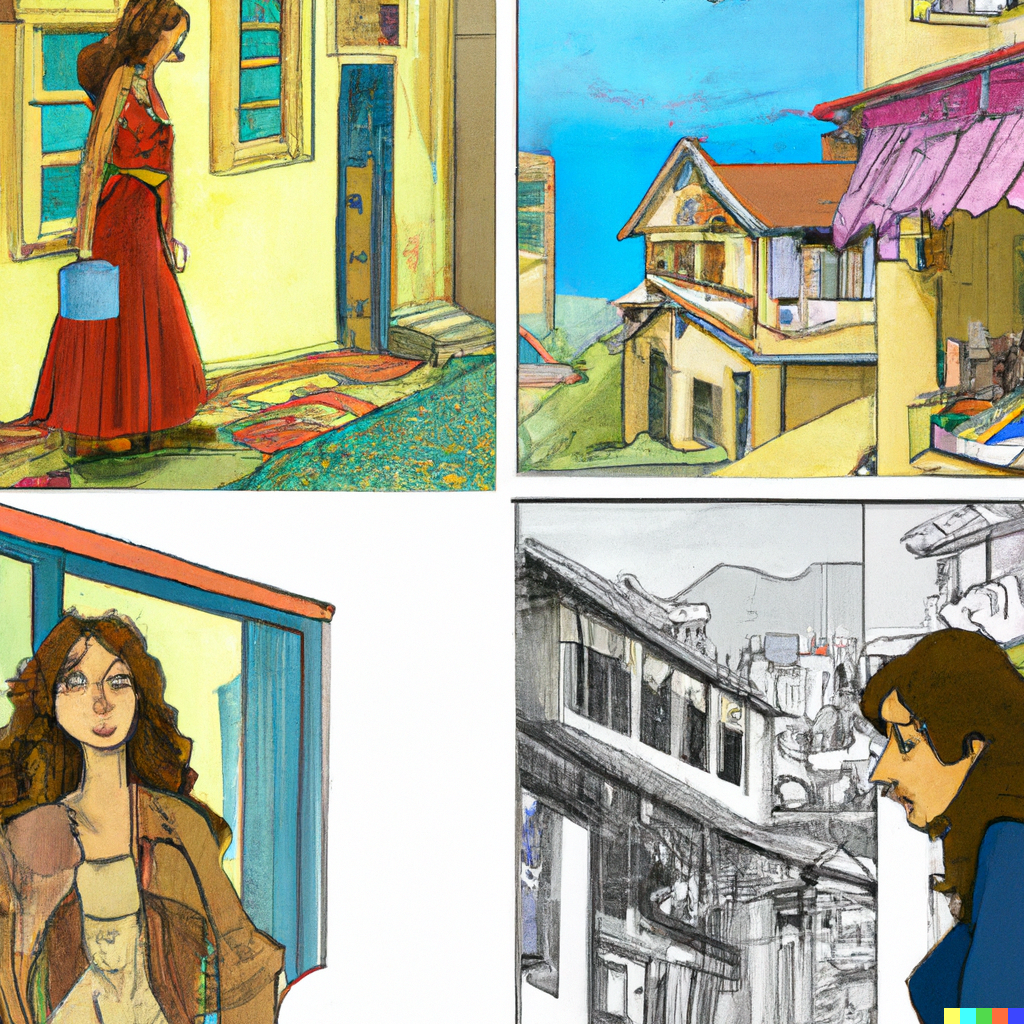

I’m an author and an artist. I’ve created several thousand images through paint, drawing, ink, and photography. I recently completed my first novel, and the goal was to illustrate it. I’m a fair illustrator, but I knew the time needed to illustrate a 300-page book was more than I had available. So I self-published and figured it was good enough. But it wasn’t an accurate representation of my vision. The book was meant to be a graphic novel, and there was a hole.

This is where Dall-E comes in.

I’ve been a beta user of OpenAI for some time (more on that in a follow-up article), so when the same company opened a waitlist for its image-generation AI, I immediately applied, and a few days later I had my toy. I’ve also tried a few other image generators that are more limited and equally problematic in their own ways, but for brevity I’m limiting this discussion to Dall-E.

It’s pretty simple on the front end. You get 50 free tokens for your first month, and 15 per month after that. Each token lets you generate four images from one prompt. I burned through mine in about an hour of playing with it, just trying to understand its process and boundaries. You can buy more tokens relatively cheaply, which I did.

Some of the images are incredible, works of art I’d be proud to have made myself; others are garbled messes. Neither is what I’m here to talk about.

Their terms of service and user guidelines prohibit using real human faces (to prevent deepfakes), anything sexually explicit, and anything gory or violent.

This makes sense given that the tool is capable of producing photorealistic images of fake things.

But I was surprised by how disturbing the images could be, even from seemingly innocent prompts.

I wanted to create an image for my graphic novel of a woman in bed waking up from a nightmare and forgetting the dream. Classic comic style would convey the nightmare and discomfort with a woman sitting up, half asleep, maybe with a hand on her forehead to suggest confusion.

This is what the image generator created. Be warned: these images are really creepy, and you can’t un-see them.

Four variations of the prompt, and all four look like they came from a model trained on pornographic material. I’m not a prude, but that face is what it is.

I thought maybe the image generator was stuck on the O-face because I’d asked it for illustrations, so I changed the prompt to ask for a regular image. Be warned, it’s way, way worse.

Nope! Apparently women always look like this when they’re in bed regardless of what they’re doing.

Out of curiosity, I tried two prompts with a male subject. Here’s what I found; it’s the stuff of nightmares.

I tried changing the prompt and, man, it created something out of a horrifying reality.

Neither of the male image sets could be confused for something sexual, unless you’re kind of into weird things.

Bias in AI is nothing new. These systems are trained on data that is implicitly biased (we live in an unequal society, and representations of diversity are skewed toward certain characteristics). As you can see, the results were mostly young white women, thin and conventionally attractive. And of course they are all extremely animated in bed, and they always wear makeup.

The most unsettling aspect is that OpenAI limited the data set it trained its tool on specifically to avoid anything of this nature.

But Google also has a tool. And the Google tool doesn’t have these limitations. So use your imagination to better understand what that means for the future of truth and reality.

To give you an idea of what this tool can do, here’s an image from Dall-E that isn’t meant to horrify. The following image isn’t real. You can tell from the cup and the bricks.

In general, the publicly available tools have safeguards to prevent deepfakes, although anyone with enough skill and knowledge can use machine learning and basic programming to build their own image generator without them.

And there are clearly workarounds. I tried to see what would happen if I asked Dall-E to create an image of me.

Since my name is also that of a South American monkey, I didn’t have much luck. BUT later, for an unrelated prompt that wasn’t asking for an image of me at all, it came up with this.

That’s me on the lower left, albeit with some artistic liberties. (I’m only sort of joking: there are only a few images of me on the internet, and this looks like one of them.)

So, in conclusion, these are the dystopian end times and I want to hide. But if you can’t beat them, join them: I’ll keep using these tools, because as an artist I can’t help myself. I’m pretty sure this is what Ursula K. Le Guin meant in “The Ones Who Walk Away from Omelas.” I guess that makes me awful.
